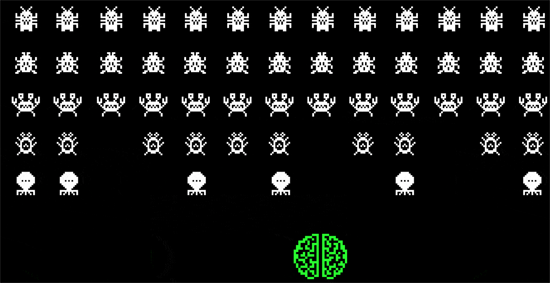

As hackers get smarter and more determined, artificial intelligence is going to be an important part of the solution

By Adam Janofsky | The Wall Street Journal

As corporations struggle to fight off hackers and contain data breaches, some are looking to artificial intelligence for a solution.

They’re using machine learning to sort through millions of malware files, searching for common characteristics that will help them identify new attacks. They’re analyzing people’s voices, fingerprints and typing styles to make sure that only authorized users get into their systems. And they’re hunting for clues to figure out who launched cyberattacks—and make sure they can’t do it again.

“The problem we’re running into these days is the amount of data we see is overwhelming,” says Mathew Newfield, chief information-security officer at Unisys Corp. “Trying to analyze that information is impossible for a human, and that’s where machine learning can come into play.”

The push for AI comes as companies face a huge increase in threats and more-sophisticated criminals who can often draw on nation-states for resources. More than 121.6 million new malware programs were discovered in 2017, according to a report by German research institute AV-Test GmbH. That is equivalent to about 231 new malware samples every minute.
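The per-minute figure follows directly from the annual total. A quick check, assuming a 365-day year (the variable names are illustrative only):

```python
# Annual total quoted above from the AV-Test report.
new_malware_2017 = 121_600_000

# Assumption: a 365-day year, which gives 525,600 minutes.
minutes_per_year = 365 * 24 * 60

per_minute = new_malware_2017 / minutes_per_year
print(round(per_minute))  # prints 231
```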

Of course, nobody thinks AI is the cure-all for stopping threats. New operating systems and software updates introduce unpredictable risks, and hackers adopt new tactics.

“Is AI a silver bullet? Absolutely not,” says Koos Lodewijkx, vice president and chief technology officer at IBM Security. “It’s a new tool in our toolbox.”

Because of those limitations, reliance on algorithms “is a little concerning and in some cases even dangerous,” says Raffael Marty, vice president of corporate strategy at cybersecurity firm Forcepoint, which is owned by defense contractor Raytheon Co.

Still, most cybersecurity experts believe that AI can do a lot more good than harm as hackers get smarter and more determined. Here are a few examples of how cybersecurity pros are using artificial intelligence—and what’s next for the technology.

Detecting malware

Traditionally, security systems look for malware by watching for known malicious files and then blocking them. But that doesn’t work for zero-day malware—threats that are unknown to the security community.

AI is helping to solve that problem and identify new attacks as soon as they appear. The systems analyze existing malware and see what characteristics the files have in common, then check to see if potential new threats have any of those traits, says Avivah Litan, a cybersecurity analyst at Gartner Inc.

Enlisting AI

Few organizations show no interest in deploying AI for cybersecurity

| Response | Share of respondents |
| --- | --- |
| Yes, we’re already doing this extensively | 12% |
| Yes, we’re already doing this on a limited basis | 27% |
| Yes, we’re adding machine learning to our existing security analytics tools on a test basis | 22% |
| Yes, we’re currently engaged in a project for deployment | 12% |
| Yes, we’re planning a project for deployment | 8% |
| No plans, but we’re interested in deploying | 12% |
| No, we’re not interested | 6% |
| Don’t know | 2% |

Source: Enterprise Strategy Group survey of 412 cybersecurity professionals at organizations with at least 500 employees in North America and Western Europe, conducted in April 2017

That is the method used at security firm CrowdStrike Inc. When a user clicks on a suspicious file, the company’s tool scans hundreds of different attributes—such as the size, content and distribution of code in the file—then runs them through a machine-learning algorithm that compares them to the company’s malware database and determines how likely the file is to be malicious.

“The reason why machine learning works so well for malware is that there’s so much data out there—it’s easier to train the system,” says CrowdStrike’s director of product marketing, Jackie Castelli.
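The general idea described above, turning a file into numeric attributes and scoring it against labeled examples, can be sketched in a few lines. This is a toy illustration, not CrowdStrike's tool: the three attributes, the nearest-neighbor scoring, the sample bytes and their labels are all assumptions chosen to keep the example small. Real systems use hundreds of attributes and far larger training sets.

```python
import math
from collections import Counter

def extract_features(data: bytes) -> list:
    """Turn raw file bytes into a small numeric feature vector (illustrative attributes)."""
    size = len(data)
    if size == 0:
        return [0.0, 0.0, 0.0]
    counts = Counter(data)
    # Shannon entropy of the byte distribution, in bits per byte (0 to 8).
    entropy = -sum((c / size) * math.log2(c / size) for c in counts.values())
    # Share of bytes that are printable ASCII characters.
    printable = sum(1 for b in data if 32 <= b < 127) / size
    return [math.log10(size), entropy, printable]

def malicious_score(sample: list, labeled: list, k: int = 3) -> float:
    """Fraction of the k nearest labeled feature vectors that are marked malicious."""
    nearest = sorted(labeled, key=lambda item: math.dist(sample, item[0]))[:k]
    return sum(label for _, label in nearest) / len(nearest)

# Hypothetical "database": text-like files labeled benign (0) and
# high-entropy blobs labeled malicious (1). Labels are made up for the demo.
benign = [b"hello world " * 50, b"config=1\nmode=safe\n" * 40, b"plain text report " * 60]
malicious = [
    bytes(range(256)) * 4,
    bytes((i * 7 + 3) % 256 for i in range(2048)),
    bytes((i * 13) % 256 for i in range(1500)),
]
database = [(extract_features(d), 0) for d in benign] + [(extract_features(d), 1) for d in malicious]

suspicious_file = bytes((i * 31 + 5) % 256 for i in range(1024))
ordinary_file = b"meeting notes " * 30

print(malicious_score(extract_features(suspicious_file), database))
print(malicious_score(extract_features(ordinary_file), database))
```

High entropy alone does not make a file malicious; a toy model like this would flag many compressed or encrypted benign files, which is one way false positives arise.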

One big hurdle for this approach to identifying malware is false positives: Currently, some AI systems classify a lot of benign programs as threats, which is a big problem given how many attacks companies face and how much time it can take to investigate each lead. But most security vendors that focus on laptops, mobile phones and other devices are working on the problem, Ms. Litan says.

Getting detailed data on users

Organizations in a range of fields, including government, retail and finance, are trying to keep unauthorized users out of their systems by combining machine learning with biometrics—studying physical information about users, like fingerprints and voices.

With biometric systems, people access services by talking or scanning a part of their body instead of entering a username and password. Machine learning can be used to analyze small differences in these characteristics and compare them to data on file, making verification precise.

For instance, financial-services firms such as Fidelity Investments, JPMorgan Chase & Co. and Charles Schwab Corp. have deployed biometric technology for customer service that scrutinizes hundreds of voice characteristics, such as the rhythm of speech.

When voice and behavioral data are combined, the system is precise enough to tell identical twins apart, says Brett Beranek, director of security strategy at Nuance. That is because characteristics such as vocabulary and frequency of pauses will differ even if people’s voices sound the same.

One hurdle for biometric technology is selling users on the idea. Researchers at the University of Texas at Austin’s Center for Identity have found that many consumers are wary of biometric authentication because of concerns about privacy, government tracking and identity theft.

Mr. Beranek says many U.S. consumers haven’t been exposed to biometric technology, unlike people in many European countries, and recommends organizations that use it address concerns and questions that customers might have. Many banks, for example, offer online explanations about how it works and how they use encryption to protect stored biometric data.

Sifting through alerts

A typical large corporation receives tens of thousands of security alerts each day warning about possible malware, newly discovered ways to exploit security flaws and ways to remediate threats, according to cybersecurity experts.

So, companies are investing in AI to help determine which alerts are most important, and then automate the responses.

Companies “can’t handle all the security alerts, and they’re missing things that are really important,” says Gartner’s Ms. Litan. “If you look at almost all the data breaches that took place in the last 10 years, there’s a security alert that notified them, but it was buried at the bottom of the list.”

For example, the breach announced by Equifax Inc. in September 2017 was partly blamed on a flaw in the Apache Software Foundation’s Struts software program. A patch for the vulnerability was issued several months before the incident at Equifax occurred, but the company failed to address the issue, The Wall Street Journal reported. The breach compromised personal information belonging to about 147.9 million consumers.

A spokeswoman for Equifax said in an email that the company has since hired a new chief information-security officer and chief technology officer, and has increased its security and technology budget by more than $200 million this year.

About three years ago, International Business Machines Corp. started training its Watson AI system on cybersecurity, with the goal of helping security teams manage the influx of threat information. The system combs through alerts, recognizes patterns and determines things such as what malware is involved, whether it is related to previous attacks and whether the company is being specifically targeted. That way, security teams can focus on the most likely threats and put the rest aside.

“We want to use AI to do all the investigative work and essentially give the analyst a researched case,” says Mr. Lodewijkx, adding that IBM determined that its own security analysts spend about 58% of their time doing repetitive work such as studying alerts.

“We’re aiming to take all of that 58% away from the analyst, so they’re able to deal with the uniquely human tasks,” he says.
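Ranking alerts so that analysts see the most likely threats first can be sketched as a simple scoring step. This is not how Watson works internally: the `Alert` fields, the weights and the sample alerts below are hypothetical, and hand-picked weights stand in for what a trained model would learn from past incidents.

```python
from dataclasses import dataclass

@dataclass
class Alert:
    name: str
    known_malware: bool          # matches a known malware family
    linked_to_past_attack: bool  # resembles an earlier incident
    targets_company: bool        # appears aimed at this organization specifically

# Hypothetical weights for each signal; a real system would learn these.
WEIGHTS = {"known_malware": 3, "linked_to_past_attack": 3, "targets_company": 4}

def priority(alert: Alert) -> int:
    """Add up the weights of every signal that is present on the alert."""
    return sum(weight for field, weight in WEIGHTS.items() if getattr(alert, field))

def triage(alerts: list, top_n: int) -> list:
    """Return the top_n alerts, highest priority first."""
    return sorted(alerts, key=priority, reverse=True)[:top_n]

alerts = [
    Alert("port scan", False, False, False),
    Alert("phishing email with known trojan", True, True, False),
    Alert("spear-phishing aimed at finance team", True, True, True),
    Alert("outdated plugin warning", False, True, False),
]

for alert in triage(alerts, top_n=2):
    print(priority(alert), alert.name)
```

Everything below the cutoff is set aside rather than deleted, so an analyst can still review it later.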

More than 100 companies currently use Watson for cybersecurity, including Sri Lanka’s Cargills Bank Ltd. and Swiss financial-services provider SIX Group. Like most AI, the technology took years to develop and encountered bumps along the way. For example, Watson at one point concluded that the word “it” was the name of the most dangerous malware, because it appeared so frequently in malware research, Mr. Lodewijkx says.

Tracking down enemies

One common struggle for data-breach victims is figuring out who attacked them, because criminals and nation-state hackers use a number of techniques to obfuscate their identity. Some cybersecurity researchers and analysts believe machine learning can be used to attribute attacks, which can help companies defend against them and prepare for future incidents.

Security systems can mine and analyze information on registries and online databases to find clues about the infrastructure that criminals set up to launch attacks, such as domain names of websites and IP addresses associated with the devices they use for hacking.

When hackers leave all those traces, “you create a behavior footprint that you leave behind that is unique,” says Chris Bell, chief executive of Diskin Advanced Technologies. The firm uses machine learning to analyze these footprints, determine who is behind an attack and who their next victims may be.

The technology is still in the early stages, but customers in the aviation, utility and financial-services industries have used it to spot pending attacks and automatically block IP addresses associated with criminal groups, according to Mr. Bell.
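One simple way to compare the infrastructure traces of a new attack with those of known groups is set overlap. The sketch below uses Jaccard similarity as a stand-in for whatever model a vendor actually uses. The group names, domains and IP addresses are invented placeholders drawn from reserved documentation ranges.

```python
def jaccard(a: set, b: set) -> float:
    """Size of the overlap divided by size of the union (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

# Hypothetical footprints: domains and IP addresses seen in earlier attacks.
known_groups = {
    "group-a": {"login-update.example", "203.0.113.7", "198.51.100.24"},
    "group-b": {"cdn-sync.example", "192.0.2.15"},
}

def closest_group(indicators: set, groups: dict) -> tuple:
    """Return the (name, similarity) of the known group whose footprint overlaps most."""
    name, footprint = max(groups.items(), key=lambda kv: jaccard(indicators, kv[1]))
    return name, jaccard(indicators, footprint)

new_attack = {"203.0.113.7", "198.51.100.24", "invoice-portal.example"}
print(closest_group(new_attack, known_groups))  # prints ('group-a', 0.5)
```

A match like this is only a lead, since attackers can share or deliberately reuse infrastructure to mislead investigators.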

“As artificial intelligence and machine learning continue to develop rapidly, edging closer to becoming general intelligence, artificial intelligence is becoming a tool adaptable to all endeavors. AI will solve more than overtly digital or technological problems. Eventually, artificial intelligence will be useful for solving all the problems that intelligence solves.

“And the capacity to solve problems defines intelligence. We are long past when chess – a realm where humans display genius – has become trivial for machines. Like individual human intelligence, artificial intelligence has matured past playing games and has entered the work force.

“I am excited to see what happens next.”